I just pushed updated limare code and a fix to ioquake3.

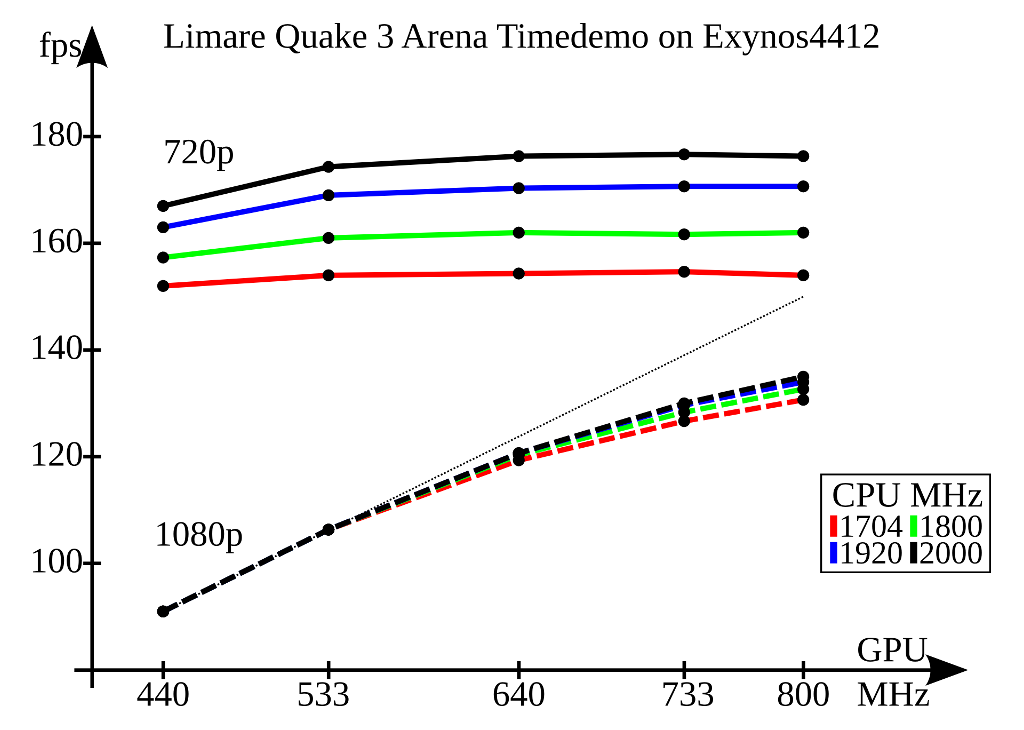

![Limare Q3A benchmark results on exynos4412]()

In almost 160 patches, loads of things change:

- clean FOSDEM code supporting Q3A timedemo on a limare ioquake3.

- support for r3p2 kernel and binary userspace as found on the odroid-x series.

- multiple PP support, allowing for the full power of the Mali-400MP4 to be used.

- fully threaded job handling, so new frames can be set up while the first is getting rendered.

- multiple textures, in rgb888, rgba8888 and rgb565, with mipmapping.

- multiple programs.

- attribute and elements buffer support.

- loads of gl state is now also handled limare style.

- memory access optimized scan pattern (hilbert) for PP (fragment shader).

- direct MBS (mali binary shader) loading for pre-compiled shaders (and OGT shaders!!!).

- support for UMP (ARM's in-kernel external memory handler).

- Properly centered companion cube (now it is finally spinning in place :))

- X11 egl support for tests.

- ...
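For anyone wondering what a Hilbert scan pattern looks like in practice, here is a minimal sketch of the textbook index-to-coordinate mapping. This is not the limare code: the function names are made up for illustration, and the sketch assumes a square grid of tiles whose side is a power of two. The point is that consecutive indices always land on neighbouring tiles, which is what makes the pattern friendly to memory access.

```c
#include <assert.h>

/* Rotate/flip a quadrant so the sub-curve has the right orientation. */
void hilbert_rot(int s, int *x, int *y, int rx, int ry)
{
    if (ry == 0) {
        if (rx == 1) {
            *x = s - 1 - *x;
            *y = s - 1 - *y;
        }
        /* swap x and y */
        int t = *x;
        *x = *y;
        *y = t;
    }
}

/* Map index d (0 .. n*n-1) along the curve to a tile position (x, y)
 * on an n by n grid. n must be a power of two (assumption of this sketch). */
void hilbert_d2xy(int n, int d, int *x, int *y)
{
    int rx, ry, s, t = d;

    assert(n > 0 && (n & (n - 1)) == 0);
    assert(d >= 0 && d < n * n);

    *x = 0;
    *y = 0;
    for (s = 1; s < n; s *= 2) {
        rx = 1 & (t / 2);
        ry = 1 & (t ^ rx);
        hilbert_rot(s, x, y, rx, ry);
        *x += s * rx;
        *y += s * ry;
        t /= 4;
    }
}
```

Walking `d` from 0 upwards and rendering the tile at each `(x, y)` gives a scan order in which every tile touches the previous one.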

As for performance, this is no better or worse than the FOSDEM code. 47fps on timedemo on the Allwinner A10 at 1024x600. But now on the Exynos 4, there are some new numbers... With the CPU clocked to 2GHz and the Mali clocked to 800MHz (!!!) we hit 145fps at 720p and 127fps at 1080p. But more on that a bit further in this post.

Upcoming: Userspace memory management.

Shortly after FOSDEM, I blogged about the 2% performance advantage over the binary driver when running Q3A.

As you might remember, we are using ARM's kernel driver, and despite all the pain that this is causing us due to shifting IOCTL numbers (whoever at ARM decided that IOCTL numbers should be defined as enums should be laid off immediately), I still think that this is a useful strategy. It lets us drop in the binary driver at any time and compare Lima against it directly, either to help with hard reverse engineering or just to make performance comparisons. Rewriting this kernel driver, or turning it into a fully fledged DRM driver, is currently more than just a waste of time; it is actually counterproductive right now.

But now, while bringing up a basic Mesa driver, it became clear that I needed to work on some form of memory management. Usually, you have the DRM driver handling all of that (even for small allocations, I think, not that I have checked). We do not have a DRM driver, and I do not intend to write one in the very near future either, so all I have is the big block mapping that the Mali kernel driver offers (which is not bad in itself).

So on the train on the way back from LinuxTag this year, I wrote up a small binary allocator to divide up the 2GB of address space that the Mali MMU gives us. On top of that, I now have two types of memory, sequential and persistent (next to UMP and external, for mapping the destination buffer into Mali memory), and limare can now allocate and map blocks of either at will.

The sequential memory is meant for per-frame data, holding things like draws and varyings and such, stuff that gets thrown away after the frame has been rendered. This simply tracks the amount of memory used, adds the newly requested memory at the end, and returns an address and a pointer. No tracking whatsoever. Very lightweight.
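A minimal sketch of this kind of sequential allocation, in C, could look like the following. It is an illustration only, not the limare implementation: the struct, the function names and the alignment parameter are all assumptions made up for this example.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-frame ("sequential") memory block: one CPU mapping
 * plus the matching address in the Mali MMU address space. */
struct seq_mem {
    uint8_t *cpu_base;   /* CPU pointer to the start of the block */
    uint32_t gpu_base;   /* GPU address of the start of the block */
    size_t size;         /* total bytes in the block */
    size_t used;         /* bytes handed out so far this frame */
};

/* Round value up to a multiple of align (align must be a power of two). */
size_t seq_align_up(size_t value, size_t align)
{
    assert(align != 0 && (align & (align - 1)) == 0);
    return (value + align - 1) & ~(align - 1);
}

/* Hand out size bytes at the end of the used area. Returns 0 and fills in
 * both the CPU pointer and the GPU address, or -1 if the block is full. */
int seq_alloc(struct seq_mem *mem, size_t size, size_t align,
              void **cpu, uint32_t *gpu)
{
    size_t start = seq_align_up(mem->used, align);

    if (start > mem->size || size > mem->size - start)
        return -1;

    *cpu = mem->cpu_base + start;
    *gpu = mem->gpu_base + (uint32_t)start;
    mem->used = start + size;
    return 0;
}

/* Throw everything away once the frame has been rendered. */
void seq_reset(struct seq_mem *mem)
{
    mem->used = 0;
}
```

There is no per-allocation bookkeeping and no free function at all; the only "free" is resetting the counter after the frame is done, which is why this stays so cheap.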

The persistent memory is the standard linked list type, with the overhead that that incurs. But this is ok, as this memory is meant for shaders, textures and attribute and element buffers. You do not create these _every_ draw, and you tend to reuse them, so it's acceptable if their management is a bit less optimized.
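As a rough illustration of what "the standard linked list type" means here, the sketch below keeps an address-ordered list of ranges, allocates first-fit and merges free neighbours again on release. Again, this is an assumption-laden example and not the limare code: the names `range`, `persist_init`, `persist_alloc` and `persist_free` are hypothetical, and a real allocator would also care about alignment.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>

/* One contiguous range of GPU address space, either in use or free. */
struct range {
    uint32_t start;
    uint32_t size;
    int used;
    struct range *next;   /* next range, in ascending address order */
};

/* Create a list holding a single free range covering the whole area. */
struct range *persist_init(uint32_t start, uint32_t size)
{
    struct range *r = malloc(sizeof(*r));

    if (!r)
        return NULL;
    r->start = start;
    r->size = size;
    r->used = 0;
    r->next = NULL;
    return r;
}

/* First fit: take the first free range that is large enough and split
 * off the remainder. Returns the range, or NULL when nothing fits. */
struct range *persist_alloc(struct range *list, uint32_t size)
{
    struct range *r;

    assert(size > 0);
    for (r = list; r; r = r->next) {
        if (r->used || r->size < size)
            continue;
        if (r->size > size) {
            struct range *rest = malloc(sizeof(*rest));

            if (!rest)
                return NULL;
            rest->start = r->start + size;
            rest->size = r->size - size;
            rest->used = 0;
            rest->next = r->next;
            r->size = size;
            r->next = rest;
        }
        r->used = 1;
        return r;
    }
    return NULL;
}

/* Mark a range free and merge neighbouring free ranges. The victim
 * pointer must not be used afterwards, as it may have been merged away. */
void persist_free(struct range *list, struct range *victim)
{
    struct range *r = list;

    victim->used = 0;
    while (r && r->next) {
        if (!r->used && !r->next->used) {
            struct range *gone = r->next;

            r->size += gone->size;
            r->next = gone->next;
            free(gone);
        } else {
            r = r->next;
        }
    }
}
```

Every allocation and release walks the list, which is exactly the overhead mentioned above, and which is fine for objects that live across many frames.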

Normally, more management makes things worse, but this memory tracking allowed me to sanitize away some frame-specific state tracking. Suddenly, Q3A at 720p, which originally ran at 145fps on the Exynos, ran at 176fps. A full 21% faster. Quite some difference.

I now have a board with a Samsung Exynos 4412 prime. This device has the quad A9s clocked at 1.7GHz, 2GB LP-DDR2 memory at 880MHz, and a quad PP Mali-400MP4 at 440MHz. This is quite the powerhouse compared to the 1GHz single A8 and single PP Mali-400 at 320MHz of the Allwinner A10. On top of that, the Exynos chip I got actually clocks the A9s to 2GHz and the Mali to a whopping 800MHz (roughly 82% faster than the base clock). Simply insane.

The trouble with the Exynos device, though, is that there are only X11 binaries. These involve a copy of the rendered buffer to the framebuffer, which totally kills performance, so I cannot properly compare these X11 binaries with my limare code. So I took my new memory management code to the A10 again, and at 1024x600 it ran the timedemo at 49.5fps. About a 6% margin over the binary framebuffer driver, or tripling my 2% lead at FOSDEM. Not too bad for increased management, right?

Anyway, with the overclocking headroom of the Exynos, it was time for a proper round of benchmarking with limare on Exynos.

Benchmark, with a pretty picture!

![Limare Q3A benchmark results on exynos4412](image-1.png)

The above picture, which I quickly threw together manually, maps it out nicely.

Remember, this is an Exynos 4412 prime, with 4 A9s clocked from 1.7-2.0GHz, 2GB LP-DDR2 at 880MHz, and a Mali-400MP4 which clocks from 440MHz to an insane 800MHz. The test is the Quake 3 Arena timedemo, running on top of limare. Quake 3 Arena is single threaded, so apart from the limare job handling, the other 3 A9 cores simply sit idle. It's sadly the only good test I have; if someone wants to finish the work to port Doom3 to GLES, I am sure that many people will really appreciate it.

At 720p, we are fully CPU limited. At some points in the timedemo (as not all scenes put the same load on CPU and/or GPU), the difference in Mali clock makes us slightly faster if the CPU can keep up, but this levels out slightly above 533MHz. Everything else is simply scaling linearly with the CPU clock. Every change in CPU clock shows up as roughly 80% of that change in framerate. We end up hitting 176.4fps.

At 1080p, it is a different story. 1080p is 2.25 times the amount of screen real estate of 720p (if that number rings a bell, 2.25MB equals two banks of Tseng ET6x00 MDRAM :p). 2.25 times the amount of pixels that need to be pushed out. Here clearly the CPU is not the limiting factor. Scaling linearly from the original 91fps at 440MHz is a bit pointless, as the Q3A benchmark is not always stressing CPU and GPU equally over the whole run. I've drawn the continuation of the 440-533MHz increase, and that would lead to 150fps, but instead we run into 135.1fps. I think that we might be stressing the memory subsystem too much. At 135fps, we are pushing over 1GBps out to the framebuffer, this while the display is refreshing at 60fps, so reading in half a gigabyte per second. And all of this before doing a single texture lookup (of which we have loads).
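The bandwidth numbers are easy to check with a few lines of C. The sketch below assumes a 32-bit framebuffer (4 bytes per pixel); the post does not state the pixel format, but that assumption is the one that matches "over 1GBps" at 135fps. The function name is made up for this example.

```c
#include <assert.h>
#include <stdint.h>

/* Bytes per second needed to write out (or scan out) full frames. */
uint64_t frame_bytes_per_second(uint32_t width, uint32_t height,
                                uint32_t bytes_per_pixel, uint32_t fps)
{
    assert(bytes_per_pixel > 0);
    return (uint64_t)width * height * bytes_per_pixel * fps;
}
```

With these assumptions, `frame_bytes_per_second(1920, 1080, 4, 135)` comes to 1,119,744,000 bytes per second written by the GPU, and `frame_bytes_per_second(1920, 1080, 4, 60)` comes to 497,664,000 bytes per second read by the display. The 2.25 factor is simply (1920 x 1080) / (1280 x 720).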

It is interesting to see the CPU become measurably relevant towards 800MHz. There must be a few frames where the GPU load is such that the faster CPU is making a distinguishable difference. Maybe there is more going on than just memory overload... Maybe in future I will get bored enough to properly implement the Mali profiling support of the kernel, so that we can get some actual GP and PP usage information, and not just the time we spent waiting for the kernel job to return.

ARM Management and the Lima driver

I have recently learned, from a very reliable source, that ARM management seriously dislikes the Lima driver project.

To put it nicely, they see no advantage in an open source driver for the Mali, and believe that the Lima driver is already revealing way too much of the internals of the Mali hardware. Plus, their stance is that if they really wanted an open source driver, they could simply open up their own codebase, and be done.

Really?

We can debate endlessly about not seeing an advantage to an open source driver for the Mali. In the end, ARM's direct customers will decide on that one. I believe that there is already 'a slight bit of' traction for the general concept of open source software. I actually think that a large part of ARM's high margin products depend on that concept right now, and this situation is not going to get any better with ARMv8. Silicon vendors and device makers are also becoming more and more aware of the pain of having to deal with badly integrated code and binary blobs. As Lima becomes more complete, ARM's customers will more and more demand support for the Lima driver from ARM, and ARM gets to repeat that mantra: "We simply do not see the advantage"...

About revealing the internals of the Mali, why would this be an issue? Or, let me rephrase that, what is ARM afraid of?

If they are afraid of IP issues, then the damage was done the second the Mali was poured into silicon and sold. Then the simple fact that ARM is that apprehensive should get IP trolls' mouths watering. Hey IP Trolls! ARM management believes that there are IP issues with the Mali! Here is the rainbow! Start searching for your pot of gold now!

Maybe they are afraid that what is being revealed by the Lima driver is going to help the competition. If that is the case, then it shows that ARM today has very little confidence in the strength of their Mali product or in their own market position. And even if Nvidia or Qualcomm could learn something today, they will only be able to make use of that two years or even further down the line. How exactly is that going to hurt the Mali in the market it is in, where 2 years is an eternity?

If ARM really believes in their Mali product, both in the Mali's competitiveness and in the originality of its implementation, then they have no tangible reason to be afraid of revealing anything about its internals.

Then there is the view that ARM could just open source their own driver. Perhaps they could. It really could be that they have had very strict agreements with their partners, and that ARM is free to do what they want with the current Mali codebases. I personally think it is rather unlikely that everything is as watertight as ARM management imagines. And even then, given that they are afraid of IP issues... How certain are ARM's lawyers that nothing contentious slipped into the code over the years? How long will it take ARM's legal department to fully review this code and assess that risk?

The only really feasible solution tends to be a freshly written driver, with a full development history available publicly. And if ARM wants to occupy their legal department, then they could try to match Intel (AMD started so well, but ATI threw in the towel so quickly, though luckily the AMD GPGPU guys continued part of it), and provide the Technical Reference Manual and other documents for the Mali. That would be much more productive, especially as that will already be more legal overhead than ARM management would be willing to spare, when they do finally end up seeing the light.

So. ARM management hates us. But guess what. Apart from telling us to change our name (there was apparently the "fear" of a trademark issue with us using Remali, so we ended up calling it Lima instead), there was nothing that they could do to stop us a year and a half ago. And there is even less that ARM can do to stop us today :)

A full 6.0%...